Hey Alexa, Who Am I Talking to?: Analyzing Users’ Perception and Awareness Regarding Third-party Alexa Skills

Overview

Abstract

The use of voice-control technology has become mainstream and is growing worldwide. While voice assistants provide convenience through automation and control of home appliances, the open nature of the voice channel makes them difficult to secure. As a result, voice assistants have been shown to be vulnerable to replay attacks, impersonation attacks, and inaudible voice commands. Existing defenses do not provide a practical solution, as they either rely on external hardware (e.g., motion sensors) or work only under very constrained settings (e.g., holding the device close to a user’s mouth). We introduce HandLock, a gesture-based authentication system for smart home voice assistants that uses built-in microphones and speakers to generate and sense inaudible acoustic signals in order to detect the presence of a known (i.e., authorized) hand gesture. Our proposed approach can act as a second authentication factor (2-FA) for specific sensitive operations, such as confirming online purchases through voice assistants. Through extensive experiments involving 45 participants, we show that HandLock achieves an average true-positive rate (TPR) of 96.51% at the expense of a 0.82% false-acceptance rate (FAR). We perform a comprehensive analysis of HandLock under various settings to showcase its accuracy, stability, resilience to attacks, and usability. Our analysis shows that HandLock not only thwarts impersonation attacks, but does so with very low overhead while remaining compatible with modern voice assistants.

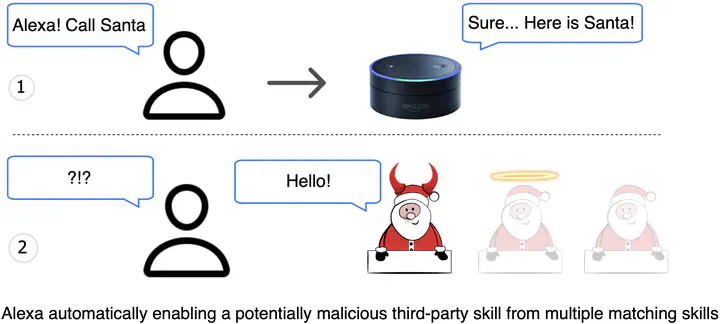

Voice personal assistants such as Amazon Alexa have become very popular and are now an important source of infotainment in households around the world. However, voice assistants come with their own privacy and security challenges for their users. Amazon Alexa allows third-party applications, known as “Skills”, to run on top of Alexa and extend its capabilities. Because multiple skills can share the same invocation phrase and can request access to sensitive user data, third-party skills raise growing security and privacy concerns.

The purpose of this project is to study the availability and effectiveness of existing security indicators (or the lack thereof) in helping users properly comprehend the risk of interacting with different types of skills. We conduct an interactive user study with active users of Amazon Alexa, in which participants listen to and interact with real-world skills using the official Alexa app.

We find that most participants fail to identify the skill developer correctly (i.e., they assume that Amazon also develops the third-party skills) and cannot correctly determine which skills will be automatically activated through the voice interface. We also propose and evaluate a few voice-based skill-type indicators and show how users would benefit from them.